Institutional Memory in Code: The Case for AI-Powered Legacy Documentation

The Vanishing Knowledge Crisis

In enterprises that rely on legacy systems—especially those built in COBOL, FORTRAN, or other aging languages—code isn’t just software. It’s memory. It encodes decades of operational logic, regulatory compliance adaptations, customer workflows, and business rules. But there’s one major problem: the people who wrote and understand that code are disappearing.

The impending retirement of legacy developers has created a quiet crisis in institutional memory. A 2024 study found that 68% of COBOL developers are expected to retire by 2025, and only a fraction of their system knowledge is formally documented. Much of the critical logic behind loan calculations, claims processing, or logistics workflows survives only as tribal knowledge: passed on verbally, recorded informally, or trapped in unstructured code comments.

When these developers leave, they take with them not just their skills but the historical context needed to maintain, audit, or modernize legacy applications. The result is operational fragility: new developers can’t safely modify old systems, compliance audits fail due to lack of traceability, and modernization efforts stall under the weight of undocumented dependencies.

Worse yet, the loss of institutional memory doesn’t show up on a balance sheet—until a system fails, an audit is triggered, or a regulatory fine is imposed. Organizations in regulated industries like banking, healthcare, and manufacturing are especially vulnerable. These sectors depend on software systems built 15–40 years ago, yet often can’t explain how those systems really work today.

This silent erosion of system knowledge is no longer sustainable. Preserving institutional memory has become a modernization imperative—and documentation is the critical first step.

Legacy Code as an Information Time Capsule

How decades-old systems silently house mission-critical knowledge

Legacy systems are often dismissed as outdated or inefficient, but they also serve an unexpected role: they are the most complete and unbroken record of how an organization has operated for decades. From eligibility rules in a healthcare provider’s claims system to fraud detection workflows embedded in banking mainframes, these systems capture the operational DNA of an enterprise.

Unfortunately, that record is buried.

Unlike modern systems, which are typically designed with modular architectures and external documentation, legacy systems tend to be monolithic and opaque. Business rules, data flows, exception handling, and compliance logic are often embedded deep within procedural code, written in languages few developers still understand and rarely accompanied by reliable comments or architectural diagrams.

These systems are time capsules: they reflect past decisions, market conditions, and regulatory environments, but are sealed in layers of syntax that only veteran developers can decipher. And even they struggle—because much of the knowledge around why the code exists in its current form has long since vanished.

This becomes painfully evident during system failures, compliance audits, or M&A activity. Organizations realize they can’t answer basic questions about how their systems work, what dependencies exist, or what the impact of a change might be. The code “runs,” but nobody knows exactly why or how.

As businesses push toward digital transformation, they must contend with this legacy inertia. Unlocking the value inside these codebases is not just about rewriting them—it’s about understanding them. And that’s where traditional documentation efforts have failed.

The next section explains why.

The Documentation Gap

Why traditional documentation fails—and what it costs

For years, legacy systems have been maintained with the assumption that “as long as it works, don’t touch it.” This mindset led to chronic underinvestment in documentation—viewed as a cost center, not a strategic asset. But as systems age and institutional knowledge erodes, the consequences of that omission become increasingly visible.

Traditional documentation approaches (manual write-ups, static PDFs, out-of-date architecture diagrams) are not built for complexity or change. Worse, they are rarely trusted. Teams often ignore legacy documentation entirely because it doesn’t reflect the current system state. When you’re unsure whether the docs are accurate, you fall back on trial-and-error debugging or on consulting the subject-matter experts (SMEs) who know the system, which is exactly the option that disappears when those SMEs retire.

The costs are significant:

- Time Loss: Developers spend an average of 17 hours per week understanding legacy code, amounting to $40,000 in annual labor waste per IT employee.

- Migration Delays: Modernization projects can stall for months because no one can explain what the legacy system actually does.

- Security & Compliance Risks: Without proper documentation, compliance audits become guesswork, and identifying vulnerabilities becomes a forensic exercise.

- Developer Onboarding Bottlenecks: New hires face steep learning curves, which reduces team velocity and increases error rates.

Manual documentation also scales poorly. In large enterprises, a single legacy system can span millions of lines of code, multiple languages, and decades of patchwork fixes. No human team can feasibly document this comprehensively, much less keep it updated.

That’s why AI changes the game.

AI as the Institutional Archivist

How AI can autonomously extract, structure, and preserve system knowledge

What if every undocumented system behavior, obscure business rule, and hidden dependency could be made visible—automatically? That’s the promise of AI-powered documentation.

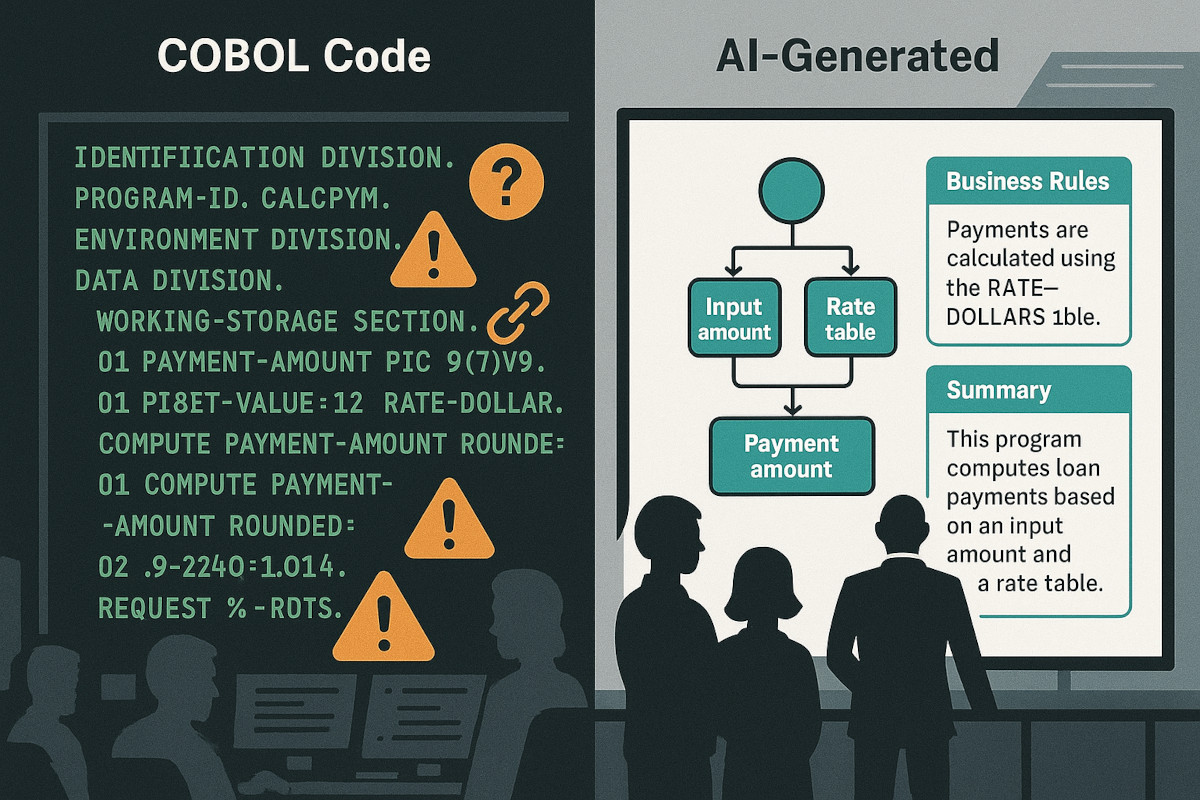

AI can act as an institutional archivist: scanning legacy codebases, understanding patterns, and translating complexity into structured, human-readable knowledge. Unlike traditional methods, AI doesn’t rely on tribal memory or manual input. It uses advanced techniques like natural language processing (NLP), static code analysis, and graph-based dependency mapping to reconstruct system understanding from the code itself.

Here’s how it works:

- Code Parsing & Semantic Understanding: AI tools ingest codebases and detect functions, variable relationships, logic flows, and architectural patterns—even across languages like COBOL, PL/I, or RPG.

- Contextual Explanation: These tools then generate plain-language summaries of what each module, function, or process does, aligned to business terminology.

- Dependency Graphing: AI can map system interconnections—file calls, API links, data lineage—often uncovering undocumented or hidden links that present risk.

- Live Documentation: Unlike static documentation, AI-generated knowledge can update in real time as the system evolves, ensuring that institutional memory is preserved going forward.
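
To make the parsing and dependency-graphing steps above more concrete, here is a minimal sketch in Python. It is not how CodeAura or any particular product works: it swaps the AI layer for a few plain regular expressions, and it assumes fixed-format COBOL in which paragraph headers start in Area A (column 8) and statements in Area B (column 12). The sample program, the paragraph names, and the `program:` prefix are all invented for illustration. It ignores inline `PERFORM ... UNTIL`, `PERFORM ... THRU`, dynamic calls, and copybooks, which a real analyzer would have to handle.

```python
import re

AREA_A = " " * 7   # fixed-format column 8: paragraph headers start here
AREA_B = " " * 11  # fixed-format column 12: statements start here

# Hypothetical COBOL fragment, invented for this illustration.
SAMPLE_COBOL = "\n".join([
    AREA_A + "PROCEDURE DIVISION.",
    AREA_A + "MAIN-PARA.",
    AREA_B + "PERFORM CALC-INTEREST.",
    AREA_B + "CALL 'RATELOOKUP' USING WS-RATE.",
    AREA_B + "STOP RUN.",
    AREA_A + "CALC-INTEREST.",
    AREA_B + "PERFORM ROUND-AMOUNT.",
    AREA_A + "ROUND-AMOUNT.",
    AREA_B + "CONTINUE.",
])

# A paragraph header: a single name in Area A followed by a period.
PARAGRAPH = re.compile(r"^ {7}([A-Z0-9][A-Z0-9-]*)\.\s*$")
# A simple out-of-line PERFORM of a named paragraph.
PERFORM = re.compile(r"\bPERFORM\s+([A-Z0-9][A-Z0-9-]*)")
# A static CALL of a program named by a quoted literal.
CALL = re.compile(r"\bCALL\s+'([^']+)'")


def build_dependency_graph(source: str) -> dict[str, set[str]]:
    """Map each paragraph to the paragraphs and programs it invokes."""
    graph: dict[str, set[str]] = {}
    current = None
    for line in source.splitlines():
        header = PARAGRAPH.match(line)
        if header:
            current = header.group(1)
            graph.setdefault(current, set())
            continue
        if current is None:
            continue  # skip anything before the first paragraph
        for target in PERFORM.findall(line):
            graph[current].add(target)
        for program in CALL.findall(line):
            graph[current].add("program:" + program)
    return graph


if __name__ == "__main__":
    for paragraph, targets in build_dependency_graph(SAMPLE_COBOL).items():
        print(paragraph, "->", sorted(targets))
```

Even this crude graph surfaces the external `CALL` to a separate program, the kind of link that tends to go undocumented. A plain-language summary layer would then be generated on top of structure like this rather than from raw text alone.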

Platforms like CodeAura take this a step further by embedding AI into the software development lifecycle. Their AI assistant, Elliot, doesn’t just generate documentation—it answers system-level questions in real time, supports code generation based on existing logic, and even bridges the gap between technical and non-technical users through a unified knowledge interface.

The benefits are profound:

- Documentation coverage at scale

- Faster onboarding for new teams

- Reduced modernization risks

- Improved audit readiness and compliance traceability

In essence, AI transforms legacy systems from black boxes into transparent, navigable assets—laying the groundwork for modernization without guesswork.

Beyond Compliance: Documentation as a Strategic Asset

How modern documentation supports auditability, onboarding, and scalability

For decades, documentation was seen as a checkbox item—something you completed at the end of a project, if time allowed. But in today’s hyper-regulated, talent-constrained, and innovation-driven environment, documentation is more than a record—it’s a lever.

Here’s why AI-powered documentation is now a strategic asset:

Auditability & Compliance Readiness

In regulated sectors like banking, healthcare, and manufacturing, audit trails are mandatory. Yet 63% of legacy CRM and transaction systems lack the traceability features needed for GDPR, HIPAA, or PCI DSS compliance.

Modern documentation systems offer:

- Automatic trace logs of code changes

- Explanation of logic flows aligned to regulatory frameworks

- Pre-built compliance mappings (e.g., Basel IV, HIPAA 2025, DORA)

This doesn’t just help during audits—it prevents fines, accelerates certification, and strengthens institutional trust.
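
As one way to picture an "automatic trace log of code changes," the sketch below keeps an append-only list in which every entry stores a hash of the previous one, so later edits to the history become detectable. This hash-chaining approach is an assumption chosen for illustration, not a description of any specific product or a requirement of any regulation. The field names, commit IDs, and control labels are hypothetical placeholders.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry body using a stable JSON encoding."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_trace(log: list, *, commit: str, unit: str,
                 summary: str, controls: list) -> dict:
    """Append a change record that is chained to the previous record."""
    previous_hash = log[-1]["entry_hash"] if log else GENESIS_HASH
    body = {
        "commit": commit,              # hypothetical commit identifier
        "unit": unit,                  # program or module that changed
        "summary": summary,            # plain-language description
        "controls": sorted(controls),  # hypothetical internal control labels
        "previous_hash": previous_hash,
    }
    entry = {**body, "entry_hash": _digest(body)}
    log.append(entry)
    return entry


def verify_trace(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    previous_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["previous_hash"] != previous_hash:
            return False
        if entry["entry_hash"] != _digest(body):
            return False
        previous_hash = entry["entry_hash"]
    return True
```

An auditor could run `verify_trace` over the stored log before relying on it. Mapping each entry to real regulatory clauses is left out here on purpose, because that mapping has to come from the compliance team.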

Accelerated Onboarding & Knowledge Transfer

The skills crisis is real: 68% of COBOL developers are expected to retire by 2025, and 40% of their knowledge is undocumented. AI-generated documentation becomes a living guidebook, reducing dependency on veteran staff and accelerating ramp-up time for new hires.

Benefits include:

- 50–70% reduction in onboarding time

- Instant access to plain-language explanations of legacy logic

- Reduced risk of introducing bugs during code changes

Platform Scalability & Operational Continuity

As organizations evolve, documentation ensures systems don’t become bottlenecks. Whether migrating to the cloud, integrating new digital platforms, or scaling across regions, clear documentation:

- Reveals interdependencies and potential failure points

- Enables seamless handoffs between internal and external teams

- Supports DevOps, SRE, and CI/CD practices

In other words, documentation is no longer a technical luxury. It’s a business continuity requirement and an enabler of growth.

Implementation Blueprint: From Legacy Code to Living Documentation

Practical steps for integrating AI-powered documentation into modernization programs

Shifting from undocumented legacy code to a living, AI-driven knowledge base isn’t a moonshot—it’s a process. The most successful organizations treat AI-powered documentation not as a bolt-on, but as a foundational layer of modernization.

Here’s how to do it:

Step 1: Inventory Your Codebase

Start with a system-wide scan:

- Identify core legacy applications (COBOL, RPG, PL/I, etc.)

- Map codebase sizes, age, and known pain points

- Tag high-risk systems with compliance or business continuity dependencies

Tip: Prioritize systems with 2M+ lines of code (LOC), 10+ years of age, and no recent documentation. These tend to be both the most critical and the most at risk.
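
The prioritization rule in the tip above can be sketched as a small filter over an inventory list. The thresholds for size and age come from the tip. The five-year cutoff for "recent" documentation, the record fields, and the sample systems are assumptions made up for this example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class LegacySystem:
    name: str
    language: str
    lines_of_code: int
    first_deployed: date
    last_documented: Optional[date]  # None means no documentation on record
    compliance_critical: bool


def _years_between(earlier: date, later: date) -> float:
    return (later - earlier).days / 365.25


def is_high_priority(system: LegacySystem, today: date,
                     min_loc: int = 2_000_000,
                     min_age_years: float = 10,
                     stale_after_years: float = 5) -> bool:
    """Apply the rule: large, old, and without recent documentation."""
    old_enough = _years_between(system.first_deployed, today) >= min_age_years
    docs_stale = (
        system.last_documented is None
        or _years_between(system.last_documented, today) > stale_after_years
    )
    return system.lines_of_code >= min_loc and old_enough and docs_stale


def prioritize(systems: list, today: date) -> list:
    """Return flagged systems, compliance-critical first, then largest first."""
    flagged = [s for s in systems if is_high_priority(s, today)]
    return sorted(flagged,
                  key=lambda s: (not s.compliance_critical, -s.lines_of_code))


# Hypothetical inventory, invented for illustration.
INVENTORY = [
    LegacySystem("claims-core", "COBOL", 3_200_000, date(1994, 3, 1), None, True),
    LegacySystem("billing-batch", "RPG", 450_000, date(2001, 6, 15), date(2010, 1, 1), False),
    LegacySystem("ledger", "PL/I", 2_500_000, date(1998, 1, 1), date(2023, 5, 1), True),
    LegacySystem("policy-admin", "COBOL", 5_000_000, date(1989, 9, 1), date(2009, 6, 1), False),
]

if __name__ == "__main__":
    for system in prioritize(INVENTORY, date.today()):
        print(system.name, system.language, system.lines_of_code)
```

Sorting compliance-critical systems first is a design choice that follows the third bullet in this step. An organization could just as reasonably weight by outage cost or change frequency.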

Step 2: Deploy AI Documentation Engines

Use AI tools (like CodeAura’s platform or similar) to ingest your code and generate initial insights:

- Generate plain-language summaries of functions, modules, and flows

- Build visual dependency maps

- Extract embedded business logic, exception handling, and data lineage

Output: Within 24–72 hours, you’ll have a navigable knowledge base with documentation coverage of 60–80%.

Step 3: Align With Stakeholders

Bring in:

- Compliance teams to validate regulatory mappings

- Dev leads to evaluate documentation accuracy

- Security teams to flag undocumented risk zones

Objective: Build trust in the AI outputs and identify which parts need human validation.
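
One way to decide which parts need human validation is to measure coverage and build a review queue from the generated output. The sketch below assumes that each generated summary comes with a confidence score and a flag for regulated data. Both fields are hypothetical, as are the 0.8 threshold and the sample records, and coverage is defined here simply as the share of code units that have any summary at all.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UnitDoc:
    unit: str                     # program, module, or paragraph name
    summary: Optional[str]        # generated plain-language summary, if any
    confidence: float             # hypothetical score between 0.0 and 1.0
    touches_regulated_data: bool  # hypothetical flag set during analysis


def documentation_coverage(docs: list) -> float:
    """Fraction of units that have a non-empty summary."""
    if not docs:
        return 0.0
    documented = sum(1 for d in docs if d.summary)
    return documented / len(docs)


def needs_human_review(docs: list, min_confidence: float = 0.8) -> list:
    """Units with no summary, a low score, or regulated data go to reviewers."""
    return [
        d.unit for d in docs
        if not d.summary
        or d.confidence < min_confidence
        or d.touches_regulated_data
    ]


# Hypothetical tool output, invented for illustration.
DOCS = [
    UnitDoc("LOAN-CALC", "Computes monthly loan interest.", 0.93, True),
    UnitDoc("RATE-LOOKUP", "Reads the rate table for a product code.", 0.91, False),
    UnitDoc("ROUNDING", "Rounds amounts to two decimals.", 0.62, False),
    UnitDoc("STATEMENT-BATCH", "Builds nightly statements.", 0.88, False),
    UnitDoc("LEGACY-EXIT-7", None, 0.0, False),
]

if __name__ == "__main__":
    print(f"coverage: {documentation_coverage(DOCS):.0%}")
    print("review queue:", needs_human_review(DOCS))
```

Sending every unit that touches regulated data to a reviewer, regardless of score, reflects the point that compliance teams validate regulatory mappings themselves rather than taking tool output on trust.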

Step 4: Integrate Documentation Into Daily Workflows

Embed the living documentation into:

- CI/CD pipelines (auto-update docs on code commit)

- Developer portals (for real-time reference)

- Audit systems (as part of change control reviews)

Result: Documentation evolves as the codebase does—without requiring manual upkeep.
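
As a rough illustration of the "auto-update docs on code commit" idea, a pipeline step could compare a fingerprint of each source file with the fingerprint recorded when its documentation was last generated, and regenerate only what changed. The manifest file, the `*.cbl` pattern, and the function names below are assumptions for this sketch. The regeneration step itself is left to whatever documentation tool is in use.

```python
import hashlib
import json
from pathlib import Path


def fingerprint(path: Path) -> str:
    """SHA-256 of the file contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def load_manifest(manifest_path: Path) -> dict:
    """Read the recorded fingerprints, or start empty if none exist yet."""
    if manifest_path.exists():
        return json.loads(manifest_path.read_text(encoding="utf-8"))
    return {}


def find_stale_docs(source_dir: Path, manifest_path: Path,
                    pattern: str = "*.cbl") -> list:
    """List source files whose content changed since docs were generated."""
    recorded = load_manifest(manifest_path)
    stale = []
    for src in sorted(source_dir.rglob(pattern)):
        key = src.relative_to(source_dir).as_posix()
        if recorded.get(key) != fingerprint(src):
            stale.append(key)
    return stale


def record_docs_generated(source_dir: Path, manifest_path: Path,
                          files: list) -> None:
    """Store current fingerprints after docs for these files are rebuilt."""
    recorded = load_manifest(manifest_path)
    for key in files:
        recorded[key] = fingerprint(source_dir / key)
    manifest_path.write_text(
        json.dumps(recorded, indent=2, sort_keys=True), encoding="utf-8"
    )
```

In a pipeline, the job would call `find_stale_docs`, regenerate documentation for the returned files, and then call `record_docs_generated`. Failing the build when stale files remain is one way to keep documentation from drifting behind the code.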

Step 5: Use It As a Launchpad for Modernization

Once documented, legacy systems can be:

- Refactored incrementally

- Wrapped in APIs or microservices

- Migrated with confidence using AI-generated risk assessments

Outcome: Documentation becomes the bridge from legacy to modern—not a side task, but the roadmap itself.
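
A documented dependency map also supports a simple form of risk assessment before refactoring or migration: given a component you plan to change, list everything that depends on it, directly or indirectly. The sketch below is a plain breadth-first search over an inverted dependency map. It stands in for, and is much simpler than, an AI-generated risk assessment. The component names are hypothetical.

```python
from collections import deque

# Hypothetical map: each component lists what it depends on.
DEPENDS_ON = {
    "ONLINE-PORTAL": {"LOAN-CALC"},
    "LOAN-CALC": {"RATE-LOOKUP", "ROUNDING"},
    "STATEMENT-BATCH": {"ROUNDING"},
    "RATE-LOOKUP": set(),
    "ROUNDING": set(),
}


def impacted_by(change_target: str, depends_on: dict) -> set:
    """Return every component that directly or transitively uses the target."""
    # Invert the map so each component lists who depends on it.
    dependents = {}
    for caller, callees in depends_on.items():
        for callee in callees:
            dependents.setdefault(callee, set()).add(caller)

    seen = set()
    queue = deque([change_target])
    while queue:
        node = queue.popleft()
        for caller in dependents.get(node, ()):
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    seen.discard(change_target)  # a cycle can lead back to the target itself
    return seen


if __name__ == "__main__":
    print(sorted(impacted_by("ROUNDING", DEPENDS_ON)))
```

A component with many transitive dependents is a candidate for wrapping behind a stable API before it is touched, while one with none can usually be refactored first at lower risk.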

Conclusion: Rebuilding Trust in Institutional Knowledge

A future where systems document themselves—and the business benefits that follow

For too long, enterprises have treated documentation as an afterthought. But as legacy systems become harder to understand, maintain, and modernize, the absence of institutional knowledge is no longer a minor inconvenience—it’s a strategic liability.

AI offers a turning point. By transforming legacy code into clear, contextual documentation, AI tools like CodeAura don’t just reduce risk—they restore trust. Trust that systems are understandable. Trust that new developers can onboard quickly. Trust that compliance can be proven, and change can be made without fear of collapse.

This isn’t just about technical clarity—it’s about business resilience. Enterprises that embrace AI-powered documentation build a living knowledge base that evolves alongside their systems. They stop relying on the memory of a few and start institutionalizing the wisdom of many.

And perhaps most importantly, they unlock the true potential of modernization—not as a disruptive threat, but as an informed, agile transition toward a smarter, faster, and more secure future.

In an era where knowledge is power, institutional memory in code is too valuable to lose. With AI, we don’t have to.
