Why Modernization Needs to Stay Local: The Case for On-Prem AI in COBOL Transformations

The Legacy Burden: Why COBOL Still Anchors Critical Infrastructure

Despite being over six decades old, COBOL remains a foundational technology in banking, healthcare, insurance, and government systems. These industries rely on COBOL not because they resist change, but because the language underpins mission-critical workloads with decades of embedded business logic. It’s not unusual for a single COBOL application to handle billions of dollars in transactions, manage patient eligibility, or determine pension entitlements — all within frameworks that have evolved slowly and conservatively to ensure reliability.

For CIOs and CTOs in regulated sectors, this legacy presence poses a unique challenge. The risk of modernization failure is not just technical—it’s institutional. Disruptions to COBOL-based systems can mean halted insurance claims, delayed financial settlements, or compromised citizen services. As a result, these systems aren’t just software—they’re operational backbones.

What’s more, the knowledge embedded in COBOL applications is often poorly documented, and key developers are retiring. Modernization is not optional; it’s urgent. But to be successful, it must preserve the integrity of what works while enabling transformation. This is where the deployment environment of AI becomes pivotal. You can’t risk sending core logic or sensitive data to the cloud to accelerate modernization. The AI has to come to the system—not the other way around.

When Data Can’t Leave: Understanding the Gravity of On-Prem Systems

In regulated sectors, COBOL applications are tightly bound to the data they process — and that data is often immovable. This concept, known as data gravity, is especially pronounced in industries like banking and healthcare, where systems were designed for on-premise environments decades before cloud computing existed.

These data sets are massive, complex, and regulated. Extracting them or replicating them in cloud environments isn’t just expensive — it’s often legally impermissible. Core banking systems, for instance, might include decades of transaction histories and customer PII. Healthcare platforms contain sensitive patient records protected under HIPAA and similar regulations worldwide. Insurance claims systems may include unstructured notes, scanned documents, and case histories that require careful handling.

What results is a modernization paradox: organizations want to leverage AI for tasks like code understanding, documentation, and transformation, but cannot expose the data those AI models need. Sending source code and metadata to an external LLM may violate compliance policies and security protocols.

This is why on-prem modernization capabilities are no longer a convenience—they’re a necessity. AI has to operate in the same secure environment as the legacy systems, accessing and reasoning over code and data without moving it outside the firewall. And that requires rethinking how AI infrastructure is deployed in the enterprise.

Compliance at the Core: Regulatory Barriers to Cloud AI

For enterprises governed by strict data protection mandates, modernization efforts must operate within a labyrinth of regulatory constraints. HIPAA, GDPR, DORA, FedRAMP, and Basel IV each constrain where and how sensitive data can be processed or how third-party technology risk must be governed, and nearly all of them present challenges for cloud-based AI.

HIPAA requires auditable control over access to protected health information, which makes public cloud inference hard to justify for LLMs that might ingest, cache, or log sensitive content. GDPR and its global counterparts add restrictions on cross-border data transfers and a right to erasure, which is difficult to honor once personal data has been used to train or fine-tune a model. DORA and Basel IV add further layers for financial services, with DORA in particular requiring demonstrable operational resilience and oversight of third-party ICT dependencies.

These aren’t abstract legal concerns. A modernization initiative that violates these mandates can result in blocked deployments, regulatory penalties, or in extreme cases, revoked licenses. Even with assurances of data isolation or “no storage” policies, cloud-native AI services often operate in ways that are opaque to the client. Logs, telemetry, and model feedback loops introduce unpredictable compliance risks.

In this climate, AI must be treated as a first-class citizen of the compliance architecture. On-premise AI infrastructure — from LLMs to vector search engines — provides the control and auditability needed to modernize securely, aligning innovation with governance rather than working around it.

The Hidden Dangers of External AI: Leakage, Latency, and Loss of Control

Modernization fueled by cloud-based AI may appear attractive in terms of convenience and scalability, but the hidden risks are substantial — and in regulated environments, often unacceptable.

First is the issue of model leakage. Even if external LLMs claim not to store inputs, the underlying mechanics of many SaaS-based AI tools involve telemetry, caching, or feedback loops that can inadvertently retain schema logic, proprietary data structures, or even snippets of business logic. In COBOL-heavy systems, this could mean exposing how financial transactions are authorized or how patient eligibility is determined — effectively broadcasting the DNA of mission-critical systems to a third party.

Latency is another operational hazard. Public cloud AI services operate on shared infrastructure with variable performance. When code transformation or semantic search needs predictable response times, as it does inside CI/CD pipelines or during audit reviews, unpredictable delays introduce workflow friction and reliability concerns. Worse, this variability can mask performance regressions or obscure the root cause of transformation errors.

Then there’s cost volatility. With usage-based pricing models, AI services that seem low-cost at pilot scale can generate runaway expenses in full production. This is especially problematic when inference operations are embedded into day-to-day engineering and compliance workflows — compounding risk through budget unpredictability.
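To make the scaling effect concrete, here is a minimal sketch of how per-token pricing grows with request volume. Every figure in it (request counts, tokens per request, and the price per 1,000 tokens) is a hypothetical placeholder chosen for illustration, not a quote from any provider.

```python
def monthly_cost(requests_per_day: int, tokens_per_request: int,
                 usd_per_1k_tokens: float, days: int = 30) -> float:
    """Estimated monthly spend under simple per-token pricing."""
    tokens_per_month = requests_per_day * tokens_per_request * days
    return tokens_per_month / 1000 * usd_per_1k_tokens


# Hypothetical workloads: same request size and price, different volume.
pilot = monthly_cost(requests_per_day=200, tokens_per_request=4_000,
                     usd_per_1k_tokens=0.01)
production = monthly_cost(requests_per_day=50_000, tokens_per_request=4_000,
                          usd_per_1k_tokens=0.01)

print(f"pilot:      ${pilot:,.2f} per month")
print(f"production: ${production:,.2f} per month")
print(f"growth:     {production / pilot:.0f}x")
```

Because cost is linear in volume, a pilot budget says little about production spend once inference is wired into everyday engineering and compliance workflows.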

Ultimately, cloud AI introduces a triple threat: loss of control over sensitive inputs, non-deterministic behavior that undermines trust, and escalating costs that strain modernization budgets. It’s a model misaligned with the principles of security, stability, and strategic planning that guide enterprise IT in regulated sectors.

Building AI Where the Data Lives: The Case for On-Premise Intelligence

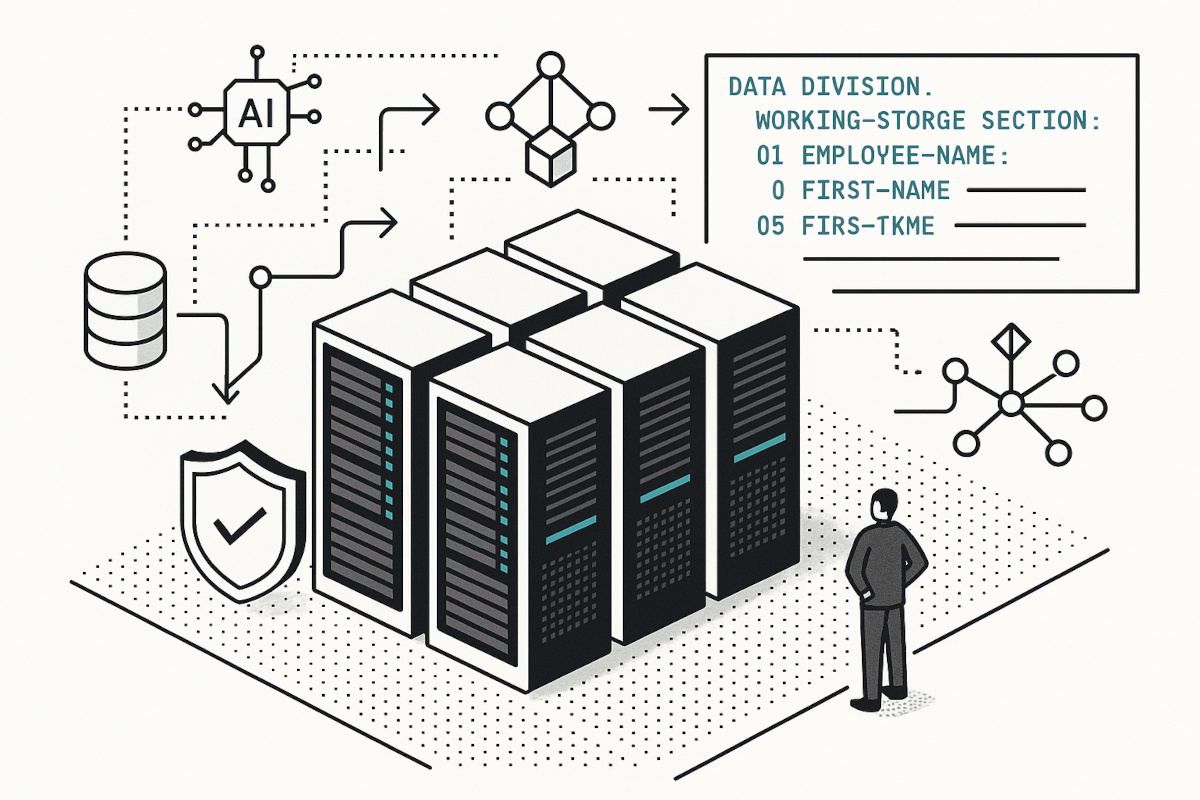

To modernize COBOL systems without compromising compliance or operational integrity, AI must be deployed within the enterprise’s own data center — directly adjacent to the systems and data it needs to understand. This shift isn’t just about security; it’s about alignment. On-premise AI brings the intelligence to the source, allowing enterprises to apply machine learning and natural language processing without extracting or replicating sensitive information.

Local LLMs and vector databases operate entirely within the organization’s controlled environment. This enables precise, policy-governed access to source code, configuration files, and documentation, the critical inputs for code understanding and refactoring. Because the infrastructure is physically and logically isolated, enterprises can enforce predictable behavior: bounded latency, known compute resources, and full traceability of every inference operation.

This level of control also supports rigorous versioning, rollback, and reproducibility, which are key requirements for regulated development environments. If a code change generated by AI is questioned in an audit or compliance review, teams that have pinned the model version and recorded the prompt and decoding parameters can reproduce the exact sequence that led to the output. That is rarely possible when inference happens in a shared, opaque cloud model that the provider can update at any time.
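One way such traceability could be captured is an audit record written alongside every inference call. The sketch below is an assumption about what that record might contain, not a description of any particular product: the function names, the field names, and the example model version string are all hypothetical.

```python
import hashlib
import json


def sha256_hex(text: str) -> str:
    """Return the SHA-256 hex digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_audit_record(model_version: str, prompt: str,
                       params: dict, output: str) -> dict:
    """Capture what is needed to re-run and verify one inference call."""
    record = {
        "model_version": model_version,   # pinned, locally hosted model build
        "params": params,                 # decoding settings, e.g. temperature, seed
        "prompt_sha256": sha256_hex(prompt),
        "output_sha256": sha256_hex(output),
    }
    # Fingerprint a canonical JSON encoding so key order does not matter.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    record["record_id"] = sha256_hex(canonical)
    return record


def matches(record: dict, replayed_output: str) -> bool:
    """True if a replayed inference produced byte-identical output."""
    return record["output_sha256"] == sha256_hex(replayed_output)


if __name__ == "__main__":
    rec = build_audit_record(
        model_version="local-cobol-llm-1.0",   # hypothetical identifier
        prompt="Summarize paragraph 2000-CALC-NET-PAY",
        params={"temperature": 0.0, "seed": 7},
        output="Computes net pay after deductions.",
    )
    print(json.dumps(rec, indent=2))
```

Storing hashes rather than raw text keeps the audit log itself free of sensitive content, while the prompt and output can live in whatever access-controlled store the organization already uses. Byte-identical replay also assumes the inference stack is configured for deterministic decoding.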

Moreover, local AI enables real-time semantic search over proprietary assets — such as legacy codebases, technical documentation, and mainframe logs — without risking exposure. This capability is especially transformative in modernization workflows, where engineers need to surface forgotten logic patterns, compliance-relevant annotations, or undocumented dependencies buried deep in decades-old systems.

In short, on-prem AI isn’t a concession — it’s a strategic enabler. It brings modernization to the doorstep of legacy infrastructure, respecting both the technical realities and the regulatory boundaries that cloud-native models often breach.

Private AI in Action: How Local LLMs Transform Legacy Codebases

Local large language models (LLMs) do more than safeguard data — they actively accelerate and de-risk the modernization of legacy systems. When deployed on-prem, these models become embedded collaborators in refactoring, documentation, and compliance auditing — operating with the same security posture as the systems they serve.

One of the most immediate benefits is code understanding. With access to the full source repository, local LLMs can parse COBOL programs end-to-end, generate function-level summaries, map interdependencies, and flag obsolete or redundant logic — all without data ever leaving the premises. This capability shortens discovery cycles, reduces manual effort, and increases confidence in planning large-scale transformations.
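Interdependency mapping does not have to start with a model. As a rough illustration, the sketch below builds a program-level call graph from static `CALL 'NAME'` statements in fixed-format COBOL source, the kind of structural input a local LLM could then summarize. It is a simplification under stated assumptions: dynamic calls through a data name, copybooks, and free-format source are not handled, and the function names are hypothetical.

```python
import re

PROGRAM_ID = re.compile(r"PROGRAM-ID\.\s*([A-Z0-9-]+)", re.IGNORECASE)
STATIC_CALL = re.compile(r"\bCALL\s+['\"]([A-Z0-9-]+)['\"]", re.IGNORECASE)


def strip_comments(source: str) -> str:
    """Drop fixed-format comment lines (an asterisk or slash in column 7)."""
    kept = []
    for line in source.splitlines():
        if len(line) > 6 and line[6] in "*/":
            continue
        kept.append(line)
    return "\n".join(kept)


def build_call_graph(sources: list[str]) -> dict[str, set[str]]:
    """Map each program's PROGRAM-ID to the programs it calls statically."""
    graph: dict[str, set[str]] = {}
    for source in sources:
        code = strip_comments(source)
        match = PROGRAM_ID.search(code)
        if not match:
            continue  # not a program unit we can name
        callees = graph.setdefault(match.group(1).upper(), set())
        for callee in STATIC_CALL.findall(code):
            callees.add(callee.upper())
    return graph


if __name__ == "__main__":
    claims = "\n".join([
        "       IDENTIFICATION DIVISION.",
        "       PROGRAM-ID. CLAIMS01.",
        "       PROCEDURE DIVISION.",
        "      * CALL 'OLDPROG' was retired",
        "           CALL 'ELIGCHK' USING WS-CLAIM.",
        "           GOBACK.",
    ])
    for caller, callees in build_call_graph([claims]).items():
        print(caller, "->", sorted(callees))
```

Programs that appear as callees but never as callers, or the reverse, are natural starting points when looking for obsolete or redundant logic.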

Semantic search further enhances this process. Local vector databases can index not just code, but comments, changelogs, and mainframe documentation. Engineers can query the system with natural language questions like “Where is eligibility logic defined for Medicare Part B?” and receive pinpointed results, a task that is impractical with conventional regex or keyword search when the relevant code never uses those words.
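The index-then-query pattern behind this kind of search can be sketched in a few lines. Note the substitution: the `embed` function below is a plain word-count vector standing in for a learned embedding, so it only matches shared vocabulary. A real deployment would replace it with a locally hosted embedding model and a proper vector database. The class name and document identifiers are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase word counts.
    Assumption: a real system calls a local embedding model here instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class LocalIndex:
    """In-memory index; nothing is sent outside the process."""

    def __init__(self):
        self._items = []  # list of (doc_id, vector)

    def add(self, doc_id: str, text: str) -> None:
        self._items.append((doc_id, embed(text)))

    def search(self, query: str, top_k: int = 3):
        """Return up to top_k (doc_id, score) pairs, best first."""
        q = embed(query)
        scored = [(cosine(q, vec), doc_id) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(doc_id, round(score, 3))
                for score, doc_id in scored[:top_k] if score > 0]


if __name__ == "__main__":
    index = LocalIndex()
    index.add("ELIGCHK.cbl:120", "Determine Part B eligibility from enrollment date and age")
    index.add("PAYCALC.cbl:45", "Compute net pay after tax and pension deductions")
    print(index.search("where is Part B eligibility logic defined"))
```

Swapping `embed` for a model-backed function leaves the rest of the flow unchanged, which is why the embedding step is isolated behind a single call.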

Refactoring also becomes more systematic. On-prem LLMs can generate modern equivalents of COBOL routines in Java, C#, or other target languages, while aligning with internal coding standards and architectural constraints. Since all inference runs locally, teams maintain full audit trails and can validate every transformation before it enters production.

Crucially, these capabilities extend beyond code. Local AI can assist with regulatory documentation, impact analysis, and audit readiness — embedding intelligence into every layer of the modernization workflow.

In essence, private AI doesn’t just support legacy transformation — it becomes the engine of it. And because it operates within the organization’s walls, it does so with the trust, transparency, and control that regulated enterprises require.

Why CodeAura’s Stack Stands Apart: A Secure Future for Regulated Modernization

While many vendors tout AI-enabled modernization, few can deliver solutions that meet the stringent requirements of regulated industries. CodeAura is different. At the heart of its offering is a fully private AI stack — purpose-built for secure, on-prem deployment in environments where data cannot be compromised.

This stack includes a locally hosted large language model fine-tuned for legacy languages like COBOL, paired with an enterprise-grade vector search engine that indexes source code, documentation, and system logs entirely behind the firewall. Unlike cloud-native competitors, CodeAura’s tools never transmit or log data externally, ensuring zero exposure to third-party telemetry or inference leakage.

The architecture is modular and deterministic. Enterprises have full control over model versions, inference pipelines, hardware allocation, and security policies. Whether integrating into existing CI/CD flows or standing up in air-gapped environments, CodeAura’s system adapts to the constraints and compliance postures of banking, healthcare, and government institutions.

Critically, CodeAura goes beyond technical capabilities. Its solutions are designed to support the full lifecycle of legacy system transformation — from automated code summarization and refactoring to semantic search, documentation generation, and audit support. All of this happens locally, giving enterprises the assurance that every AI-generated insight can be traced, validated, and governed.

This strategic alignment of capability and control is what sets CodeAura apart. It doesn’t ask organizations to compromise on compliance to modernize. It enables them to modernize precisely because it was built with compliance in mind.

A Strategic Imperative: Making AI Work Within the Walls of Compliance

For CIOs, CTOs, and CISOs navigating legacy modernization, the message is clear: artificial intelligence must conform to the enterprise — not the other way around. In regulated industries, this means deploying AI within the organization’s existing boundaries of control, not outsourcing core modernization logic to opaque third-party platforms.

On-premise AI is no longer a niche preference; it is a strategic requirement. It ensures that modernization efforts remain aligned with compliance mandates, data sovereignty laws, and institutional risk thresholds. More importantly, it allows enterprises to move forward confidently — extracting value from decades of COBOL investment without exposing themselves to the operational and regulatory uncertainties of cloud-native AI.

CodeAura embodies this shift. By delivering a private AI stack designed for on-prem environments, it gives regulated organizations the tools they need to modernize securely, transparently, and on their own terms. Whether it’s generating compliant documentation, refactoring codebases, or enabling internal semantic search, CodeAura’s approach turns AI into an asset that works within — not around — the constraints of regulated IT.

In a landscape where digital transformation and regulatory scrutiny are increasing in parallel, this approach isn’t just prudent. It’s essential.
