Forgotten but Critical: Why PL/I Systems Still Matter, and How AI Is Helping Document the Undocumented

The Quiet Persistence of PL/I in Modern Infrastructure

Despite being largely absent from modern developer conversations, PL/I remains embedded in the digital core of many regulated industries. First developed in the 1960s to unify scientific and business computing, PL/I found its niche in high-assurance environments that demanded versatility, durability, and tight integration with mainframe ecosystems. Today, it still underpins critical operations across government agencies, defense contractors, telecom networks, and utility providers.

These systems are not merely historical artifacts. They continue to process payroll, route electricity, manage logistics, and store sensitive citizen and customer data. In many cases, they are intertwined with Job Control Language (JCL), DB2 databases, and IMS transaction managers—forming tightly coupled environments that are functionally stable but practically opaque.

What makes PL/I particularly sticky is its role as a “middle language”: richer than classic COBOL in control structures and data handling, with block structure, pointers, and built-in condition handling, yet far less mainstream than C or Java. This has contributed to its survival in niche yet mission-critical domains where the cost of failure is high and the incentive to “rip and replace” is low.

Yet as reliable as PL/I systems have been, their very persistence now poses a risk. Few IT leaders have line of sight into what these programs actually do. Much of the source code has not been touched in years. And with every retirement, more institutional knowledge about these environments quietly disappears. Understanding the scope of this legacy footprint is the first step toward managing it strategically.

A Legacy of Complexity: The Hybrid Nature of PL/I Systems

PL/I’s design ambition, to serve both scientific computation and business data processing, resulted in a language that defies simple categorization. It supports structured programming, exception handling through ON-conditions, both stream and record I/O, and complex data structures. Over time, organizations customized and extended PL/I codebases, embedding business logic, data manipulation, and error-handling mechanisms that are now nearly impossible to unravel without deep domain expertise.

This hybrid character becomes even more challenging when paired with the broader mainframe environment. PL/I programs often call or are called by COBOL modules. They interact with DB2 and IMS databases, rely on JCL scripts to orchestrate batch jobs, and pass data through bespoke file formats with little to no schema. The result is an ecosystem that is not just legacy—it’s entangled.

Unlike more modular, modern architectures, PL/I systems were built in a monolithic fashion. Over decades, updates were layered on top of one another with little regard for future maintainability. Control flow is not always linear, dependencies are often undocumented, and condition handling can mask logic paths that even seasoned engineers struggle to trace.

This structural complexity isn’t just a technical challenge—it’s a barrier to understanding business processes that are still in active use. Without a clear map of how these systems behave, organizations can’t assess risk, plan migrations, or even ensure compliance. The hybrid nature of PL/I is both a strength and a vulnerability—and it’s one that most enterprises are no longer equipped to manage manually.

Institutional Memory Loss: The Hidden Threat to Operational Continuity

The most insidious threat facing PL/I-based systems today isn’t technological—it’s human. Over decades, the knowledge required to understand, maintain, and evolve these systems has gradually walked out the door. As veteran developers retire or move on, their deep familiarity with business rules, exception paths, and undocumented shortcuts disappears with them.

What remains is often a brittle set of core applications with little to no internal documentation. Change logs are incomplete. Functional specifications are outdated or lost. Source control history may be partial or missing entirely for large portions of the codebase. In many organizations, no one can fully explain what the PL/I systems are doing, why they behave a certain way, or what would break if a single module were altered.

This institutional memory loss has serious operational consequences. It hampers incident response when systems fail. It delays audits when regulators request data lineage or logic traceability. It prevents CIOs from confidently planning modernization roadmaps because the cost, complexity, and dependencies of the legacy systems are largely unknown.

Perhaps most dangerously, it fosters a false sense of security. Just because a PL/I program has “always worked” doesn’t mean it’s safe to ignore. Without a living understanding of how these systems operate—and how they relate to upstream and downstream functions—organizations are effectively flying blind. The business logic that powers critical services has become a black box, and no strategic leader can afford to let that persist.

Why Traditional Documentation Efforts Fall Short in PL/I Environments

Efforts to manually document PL/I systems often start with good intentions—but they rarely achieve lasting impact. Traditional methods, such as static code reviews, interviews with remaining SMEs, or reverse engineering tools designed for COBOL or Java, fall flat when applied to PL/I’s idiosyncrasies. The language’s unique blend of procedural constructs, condition handling, and embedded data definitions defies linear documentation techniques.

Complicating matters further, many PL/I systems evolved organically over decades. A single program might contain thousands of lines of interleaved business logic and system-level operations, with no clear separation of concerns. Flow control is often hidden in GOTO-heavy logic and in ON-units that fire only when a condition is raised. Implicit declarations and storage overlays (DEFINED and BASED variables) can obscure which data a statement actually touches. Side effects are common. Even seasoned programmers struggle to trace what a given block of code is actually doing, especially when the documentation, if it exists, is sparse or obsolete.
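To make the control-flow problem concrete, here is a minimal Python sketch that lists the `GO TO` targets and ON-conditions in a PL/I source string. The sample program, the function name `control_flow_hotspots`, and the regex approach are all assumptions made for illustration. A real analyzer would need a proper PL/I parser, since this scan ignores string literals and preprocessor statements.

```python
import re

# Hypothetical PL/I fragment, invented for illustration only.
SAMPLE = """
PAYCALC: PROCEDURE OPTIONS(MAIN);
   ON ENDFILE(PAYIN) GO TO FINISH;
   ON ZERODIVIDE BEGIN;
      RATE = 0;
   END;
READ_NEXT:
   READ FILE(PAYIN) INTO(PAYREC);
   IF HOURS = 0 THEN GOTO READ_NEXT;
   /* GO TO OLD_PATH; retired branch */
   CALL COMPUTE(PAYREC);
   GO TO READ_NEXT;
FINISH:
END PAYCALC;
"""

# PL/I comments are delimited by /* ... */ and may span lines.
COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
# Matches both "GO TO label" and "GOTO label".
GOTO = re.compile(r"\bGO\s*TO\s+([A-Z_#@$][\w#@$]*)", re.IGNORECASE)
# Matches the condition name that follows the ON keyword.
ON_UNIT = re.compile(r"\bON\s+([A-Z]+)", re.IGNORECASE)


def control_flow_hotspots(source):
    """Return GO TO targets and ON-conditions found outside comments."""
    code = COMMENT.sub(" ", source)
    return {
        "goto_targets": [m.group(1).upper() for m in GOTO.finditer(code)],
        "on_conditions": [m.group(1).upper() for m in ON_UNIT.finditer(code)],
    }


if __name__ == "__main__":
    print(control_flow_hotspots(SAMPLE))
```

Even in this tiny fragment, the end-of-file exit path lives in an ON-unit rather than in the read loop itself, which is the kind of jump a top-to-bottom reading tends to miss.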

Moreover, static documentation cannot keep pace with change. Even small updates, like altering a file format or reconfiguring a JCL job, can ripple unpredictably across dependent modules. Without automation, documentation becomes a snapshot—quickly outdated, difficult to validate, and rarely trusted by engineers or auditors.

This is not a tooling problem alone—it’s a cognitive load problem. Human analysts can’t scale to the size, complexity, and obscurity of most PL/I ecosystems. What’s needed is a new approach that combines language understanding with pattern recognition and contextual inference. This is where AI enters the picture—not as a replacement for domain experts, but as an accelerator for rediscovery and documentation.

AI as an Archaeologist: Rediscovering Business Logic in Legacy Code

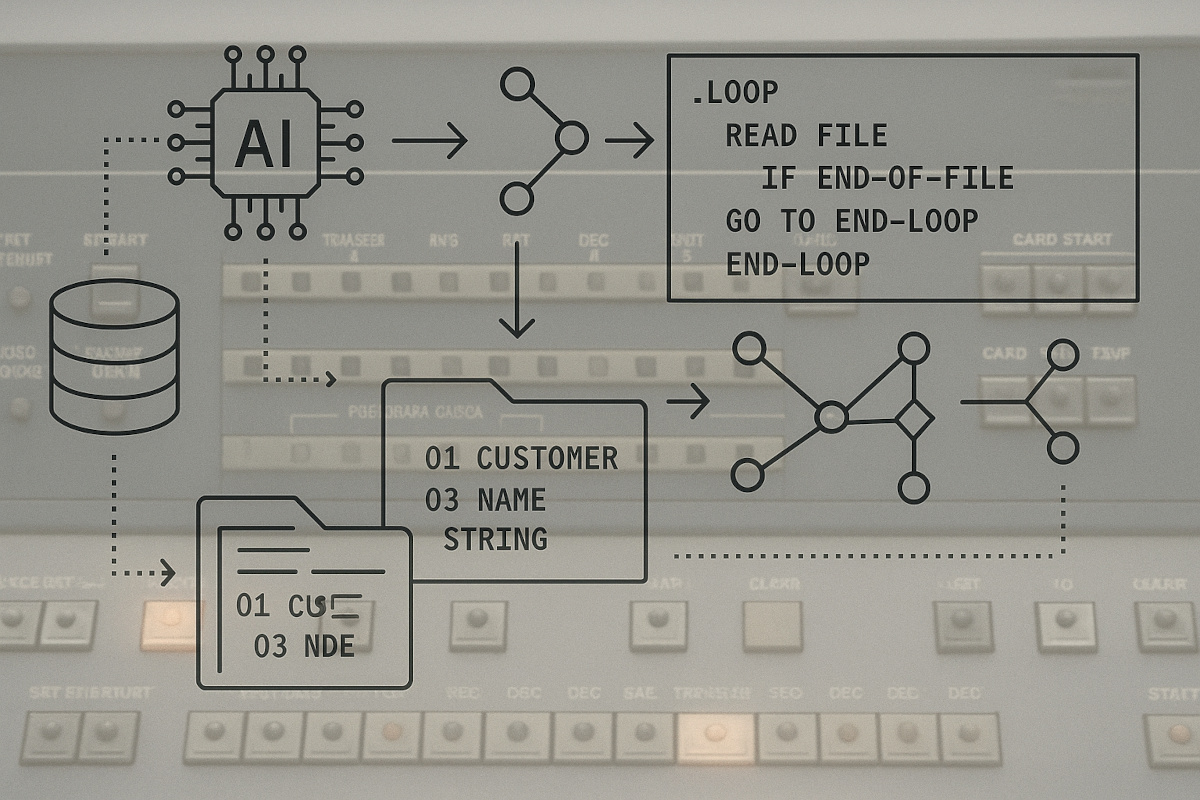

Artificial intelligence, particularly large language models (LLMs), is reshaping how organizations approach the rediscovery of legacy systems like PL/I. Unlike traditional tools, LLMs do not rely solely on hardcoded rules or shallow pattern matching. Trained on large bodies of code and text, they can infer likely intent and identify recurring architectural and procedural patterns. This makes them well suited to excavating business logic buried in decades-old codebases, provided their output is reviewed by people who know the domain.

LLMs can parse PL/I source code and recognize semantic structures that map to common business functions—validations, calculations, file handling, and data transformations—even when those functions are undocumented or inconsistently implemented. They detect cross-module dependencies, generate call graphs, and highlight points of interaction with JCL, DB2, or IMS layers. The result is a clearer picture of how a PL/I application behaves in practice, not just in theory.
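The call-graph idea can be illustrated without any AI at all. The Python sketch below builds a simple caller-to-callee map from `CALL` statements. The module names, the sample sources, and the helpers `build_call_graph` and `callers_of` are hypothetical. This is a plain static scan rather than a description of how an LLM or any particular product works, and it misses function references and dynamically fetched procedures.

```python
import re

# Hypothetical module sources keyed by member name; invented for illustration.
MODULES = {
    "PAYMAIN": "PAYMAIN: PROC OPTIONS(MAIN); CALL PAYREAD; CALL PAYCALC(REC); END PAYMAIN;",
    "PAYCALC": "PAYCALC: PROC(REC); CALL TAXLKUP(REC); /* CALL OLDTAX; */ END PAYCALC;",
    "PAYREAD": "PAYREAD: PROC; EXEC SQL SELECT NAME INTO :NAME FROM EMP; END PAYREAD;",
}

COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
CALL = re.compile(r"\bCALL\s+([A-Z_#@$][\w#@$]*)", re.IGNORECASE)


def build_call_graph(modules):
    """Map each module to the sorted set of procedures it calls via CALL."""
    graph = {}
    for name, source in modules.items():
        code = COMMENT.sub(" ", source)  # ignore commented-out calls
        graph[name] = sorted({m.group(1).upper() for m in CALL.finditer(code)})
    return graph


def callers_of(graph, target):
    """Return the modules that call `target` directly."""
    return sorted(caller for caller, callees in graph.items() if target in callees)


if __name__ == "__main__":
    graph = build_call_graph(MODULES)
    for module, callees in graph.items():
        print(module, "->", callees)
    print("Callers of PAYCALC:", callers_of(graph, "PAYCALC"))
```

Inverting the graph with `callers_of` gives a first answer to "what depends on this module?", the question that impact analysis starts from.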

More importantly, AI tools can surface what matters to both developers and executives. For engineers, they provide data flow diagrams, control flow charts, and code complexity scoring. For business stakeholders, AI translates technical routines into readable summaries that describe what a program does and why it matters—turning opaque logic into understandable intent.
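As a rough illustration of what a complexity score can look like, the Python sketch below counts decision keywords in a PL/I procedure, loosely in the spirit of cyclomatic complexity. The keyword list, the function name `complexity_score`, and the sample procedures are assumptions for this example, not the metric any specific tool uses. Because PL/I keywords are not reserved words, a variable named `IF` would be miscounted, so treat the result as a coarse proxy.

```python
import re

COMMENT = re.compile(r"/\*.*?\*/", re.DOTALL)
# Keywords that introduce a branch or a loop test (an assumed, simplified list).
DECISION = re.compile(r"\b(IF|WHEN|WHILE|UNTIL)\b", re.IGNORECASE)

# Hypothetical procedures, invented for illustration.
SIMPLE = "ADD1: PROC(X) RETURNS(FIXED BIN); RETURN(X + 1); END ADD1;"
BRANCHY = """
RATE: PROC(AGE, YEARS) RETURNS(FIXED DEC(5,2));
   IF AGE < 18 THEN RETURN(0);
   SELECT;
      WHEN (YEARS > 20) RETURN(7.5);
      WHEN (YEARS > 10) RETURN(5.0);
      OTHERWISE RETURN(2.5);
   END;
   /* IF LEGACY THEN RETURN(9.9); */
END RATE;
"""


def complexity_score(source):
    """Return 1 plus the number of decision keywords outside comments."""
    code = COMMENT.sub(" ", source)
    return 1 + len(DECISION.findall(code))


if __name__ == "__main__":
    print("SIMPLE :", complexity_score(SIMPLE))
    print("BRANCHY:", complexity_score(BRANCHY))
```

Ranking modules by a score like this gives a first-pass order for review. A fuller metric would also weigh ON-units, `GO TO` density, and external dependencies.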

This capability goes beyond mere documentation. It enables faster onboarding for new teams, more accurate impact analysis for proposed changes, and better compliance reporting in regulated sectors. AI acts as a digital archaeologist—systematically uncovering the logic, relationships, and risks hidden in legacy code, and presenting them in ways that bridge the technical-business divide.

From Code to Clarity: Translating PL/I for Technical and Business Stakeholders

One of the most powerful contributions AI brings to PL/I modernization is translation—not across languages, but across roles. In most enterprises, there’s a gap between those who write or maintain code and those who make strategic decisions based on what that code enables. For PL/I systems, this gap is especially wide—and dangerous.

AI-driven tools close this gap by turning opaque, low-level logic into digestible business narratives. Using natural language processing, they can describe what a PL/I module does in plain English: “calculates monthly pension benefits based on service years and contribution history,” or “validates account status before initiating wire transfer.” This translation empowers business analysts, compliance officers, and risk managers to finally understand the systems they rely on.

For technical teams, AI goes further—producing artifact-level insights like data lineage maps, I/O flowcharts, and logic segmentation that reveal where complexity or fragility hides. It identifies redundant functions, undocumented routines, and spaghetti code that poses long-term risk. These outputs help modernization teams prioritize what to refactor, what to replatform, and what to retire.

This isn’t just about visibility. It’s about building a shared vocabulary between IT and the business. When everyone—from developers to CFOs—can talk about legacy systems in terms of function, value, and risk, the organization becomes significantly more aligned and agile. That alignment is essential not just for documentation, but for the strategic modernization that follows.

The CodeAura Edge: Intelligent Documentation for PL/I Ecosystems

While many tools claim to support legacy environments, few offer deep, purpose-built capabilities for PL/I. CodeAura stands apart by delivering intelligent documentation specifically optimized for the hybrid realities of mainframe ecosystems—where PL/I often interacts with COBOL, JCL, and complex data stores like DB2 and IMS.

At the core of CodeAura’s offering is an AI engine trained to parse and understand PL/I in all its variants. It doesn’t just analyze syntax—it interprets logic flow, uncovers implicit dependencies, and scores code modules for maintainability and risk. This allows organizations to instantly identify undocumented functions, assess code complexity, and flag areas of concern—without relying on outdated SME knowledge or months of manual effort.

Crucially, CodeAura automates documentation generation across technical and executive levels. It produces structured outputs like call graphs, data flow diagrams, and component maps. It also generates plain-language summaries of program behavior, offering context-aware explanations that help stakeholders—from developers to compliance leads—grasp how a system operates and why it matters.

Beyond documentation, CodeAura supports strategic planning. Its insights enable teams to triage legacy applications, model modernization scenarios, and prioritize investments based on complexity, risk exposure, and business value. For regulated industries, this means faster audit readiness, clearer impact assessments, and fewer surprises when migration begins.

In a world where legacy systems are no longer understood, CodeAura provides not just clarity but confidence.

Reclaiming Control: Modernization Begins with Knowing What You Have

For too long, PL/I systems have existed in the shadows—functioning, but not fully understood. As regulatory scrutiny tightens, cyber risks grow, and workforce expertise dwindles, this status quo is no longer sustainable. The path to modernization doesn’t begin with a rewrite or a rip-and-replace strategy. It begins with reclaiming knowledge.

Understanding what your legacy systems do—down to the business logic they execute and the data they move—is the foundation of every responsible IT decision. Without this insight, modernization is guesswork. With it, organizations can make strategic calls: which modules to refactor, which to replatform, and which to retire. They can also meet compliance requirements with confidence, defend against audit findings, and quantify the operational risk of doing nothing.

This is where AI and platforms like CodeAura are transformative. They don’t just shine a light on forgotten code; they provide the tools to act on what’s discovered. They accelerate the documentation process, surface hidden dependencies, and make legacy systems legible again—technically and operationally.

For leaders in sectors like government, utilities, telecom, and defense, this isn’t optional. It’s a prerequisite for resilience. Reclaiming control over PL/I systems isn’t just a technical imperative—it’s a strategic one. The sooner enterprises understand what they have, the sooner they can chart a course to what’s next.
