Modernization in Motion: Using Multi-Agent AI Systems to Transform Legacy Codebases

The Complexity Crisis: Why Legacy Modernization Still Fails

Enterprise modernization initiatives often begin with ambition and end in attrition. For large organizations—especially those in regulated sectors like banking, healthcare, and manufacturing—legacy systems are not just outdated; they are deeply embedded in business-critical operations. These systems, often running on COBOL, PL/I, or other monolithic architectures, contain decades of logic, tribal knowledge, and undocumented interdependencies. Despite their fragility, they remain indispensable.

Why do modernization efforts struggle? In many cases, the challenge is underestimated. Modernization is rarely a lift-and-shift exercise. It requires deep code comprehension, business rule extraction, architecture redesign, and end-to-end validation—all under the constraints of regulatory compliance. The process is further complicated by teams grappling with incomplete documentation, high turnover, and tools that offer little more than superficial code translation.

Traditional AI approaches have been deployed to address this, but they often rely on single-model inference—where one monolithic AI model attempts to handle everything from parsing to rewriting to validating code. These approaches may work in narrow scopes but falter under real-world enterprise complexity. They lack explainability, fail at edge cases, and introduce risks that no CIO or CRO can comfortably ignore.

The failure isn’t in the ambition to modernize—it’s in the architecture of the solution. What’s needed is a more modular, scalable, and auditable way to modernize: one that mirrors how real engineering teams function, with specialized roles, collaboration, and traceability. That’s where multi-agent AI systems come in.

From Lone Wolves to Task Forces: The Rise of Multi-Agent AI

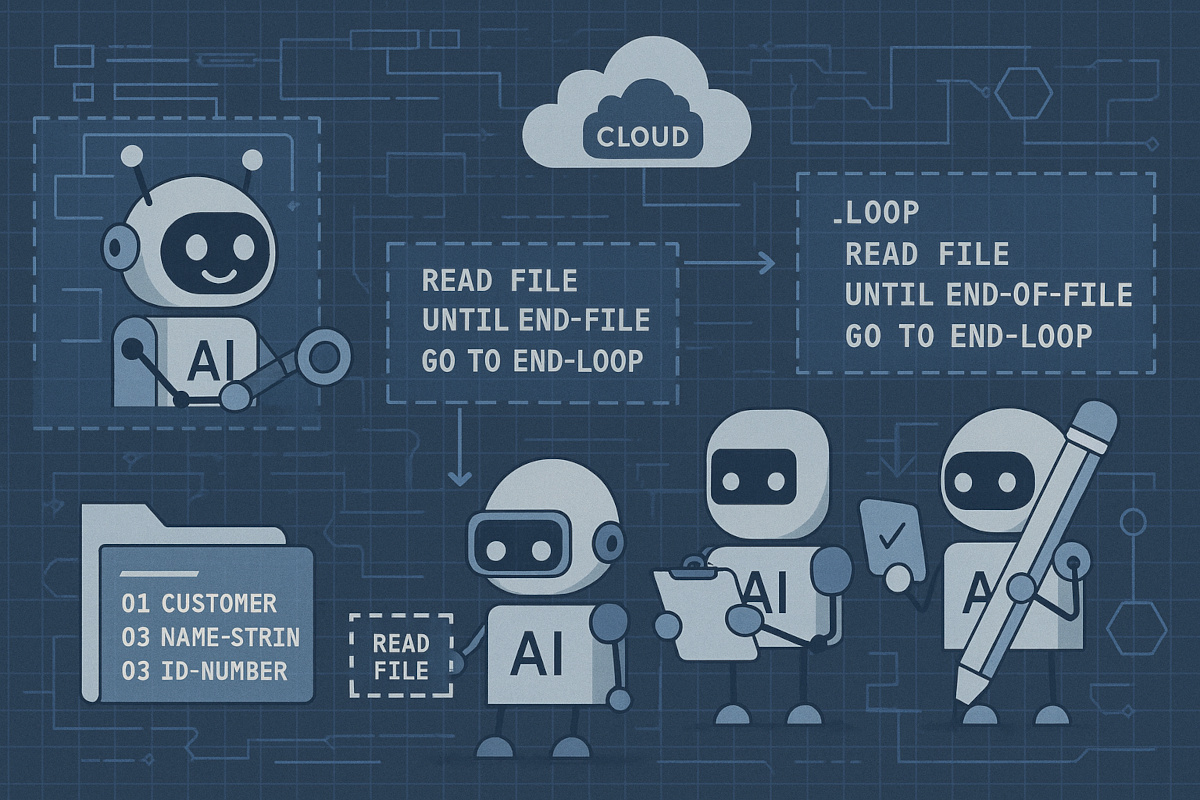

The core breakthrough behind multi-agent AI systems lies in how they reframe the modernization challenge—not as a single monolithic problem, but as a set of discrete, specialized tasks that can be handled by autonomous agents working in coordination.

Each agent in a multi-agent system operates with a defined role and objective. For legacy modernization, this could mean one agent focuses solely on parsing COBOL code and generating an abstract syntax tree (AST), while another interprets business logic embedded within conditional branches. A third agent might translate this logic into modern programming constructs, while a fourth validates the outputs against existing test cases or generates new ones. A fifth might synthesize technical and compliance documentation for auditors and developers alike.

Technologies such as LangGraph, AutoGen, and CrewAI now make it possible to orchestrate these agents as part of a coherent workflow. Agents communicate using standardized protocols or shared memory structures, enabling coordination without collapsing into chaos. This design mirrors how real-world DevOps and development teams operate—through distributed responsibility, peer validation, and iterative refinement.
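To make the idea of coordination through a shared memory structure concrete, here is a minimal, framework-agnostic sketch. It does not use the LangGraph, AutoGen, or CrewAI APIs; the `Blackboard` and `Agent` classes, the key names, and the one-line "agents" are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Blackboard:
    """Shared memory that agents read from and write to (hypothetical structure)."""
    entries: dict[str, Any] = field(default_factory=dict)
    history: list[tuple[str, str]] = field(default_factory=list)  # (agent, key written)

    def post(self, agent: str, key: str, value: Any) -> None:
        self.entries[key] = value
        self.history.append((agent, key))


@dataclass
class Agent:
    name: str
    needs: tuple[str, ...]                 # keys that must exist before the agent can run
    produces: str                          # key the agent writes
    work: Callable[[dict[str, Any]], Any]  # placeholder for the agent's real logic

    def ready(self, board: Blackboard) -> bool:
        has_inputs = all(key in board.entries for key in self.needs)
        return has_inputs and self.produces not in board.entries


def run_until_idle(agents: list[Agent], board: Blackboard) -> None:
    """Keep running whichever agents are ready until none can make progress."""
    progressed = True
    while progressed:
        progressed = False
        for agent in agents:
            if agent.ready(board):
                board.post(agent.name, agent.produces, agent.work(board.entries))
                progressed = True


board = Blackboard()
board.post("user", "source", "IF BALANCE < 0 PERFORM FLAG-OVERDRAWN.")
agents = [
    # Listed out of order on purpose: readiness, not list position, decides who runs.
    Agent("rewriter", ("rules",), "modern_code", lambda e: f"# {len(e['rules'])} rule(s) to port"),
    Agent("extractor", ("tokens",), "rules", lambda e: [t for t in e["tokens"] if t == "IF"]),
    Agent("parser", ("source",), "tokens", lambda e: e["source"].split()),
]
run_until_idle(agents, board)
print(board.history)
```

Because each agent declares what it needs and what it produces, the order of work falls out of the data rather than being hard-coded, and the `history` list records which agent wrote which entry.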

The advantage of this modularity is profound. When agents are narrowly scoped, their performance can be optimized, monitored, and even replaced without affecting the entire system. This also makes the system more maintainable and auditable—crucial for organizations under regulatory scrutiny.

In essence, multi-agent systems don’t replace human developers—they give them a scalable, intelligent task force that can operate continuously, document its reasoning, and adapt to new rules and requirements. Modernization, once a daunting overhaul, becomes a manageable, incremental transformation.

Breaking Down the Agents: A Role-Based Approach to Code Transformation

Effective legacy modernization demands more than brute-force code conversion—it requires a nuanced understanding of the system’s structure, semantics, and operational context. Multi-agent AI architectures enable this by assigning specific responsibilities to dedicated agents, each focused on a discrete stage of the modernization pipeline. Here’s how a typical role-based agent model works in practice:

- Agent A: The Parser – This agent ingests source code in COBOL, PL/I, RPG, or other legacy languages and constructs a detailed Abstract Syntax Tree (AST). It also maps dependencies, file structures, and inline comments, serving as the foundation for all downstream tasks.

- Agent B: The Rule Extractor – Using the AST and control flow, this agent identifies embedded business logic—such as pricing algorithms, eligibility criteria, or compliance workflows. It captures implicit rules that are often undocumented, helping preserve business intent during transformation.

- Agent C: The Rewriter – With a clear understanding of the legacy logic, this agent generates modern code—typically in Java, JavaScript, or other enterprise-ready languages. It applies best practices such as modularization, dependency injection, and microservice compatibility.

- Agent D: The Validator – Acting as a quality gate, this agent runs test cases, checks output fidelity, and evaluates whether the migrated code aligns with the original system’s functional and non-functional requirements. It can also simulate runtime behaviors to catch edge-case regressions.

- Agent E: The Documenter – This agent synthesizes technical documentation, diagrams, and audit reports. It maps code changes to compliance frameworks such as HIPAA and Basel IV, and to the requirements of payment services such as FedNow, so that modernization doesn’t create blind spots in governance.

This division of labor mimics a high-performing engineering team, but at AI scale. Each agent brings depth and precision to its task, while their collaboration ensures a coherent, traceable transformation process. The result is not just translated code, but a living, maintainable system ready for the future.
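As a rough illustration of the first two roles, the sketch below runs a toy "parser" and "rule extractor" over a five-line COBOL fragment. It is deliberately simplistic: a real parser agent would build a full AST from a COBOL grammar, whereas this one only tags lines by their leading keyword. The sample source, the `Statement` and `Rule` types, and the single IF/ELSE pattern it recognizes are all assumptions made for the example.

```python
import re
from dataclasses import dataclass

# Hypothetical legacy fragment used only for this example.
LEGACY_SOURCE = """\
       IF CUSTOMER-AGE >= 65
           MOVE 0.15 TO DISCOUNT-RATE
       ELSE
           MOVE 0.00 TO DISCOUNT-RATE
       END-IF.
"""


@dataclass(frozen=True)
class Statement:
    line: int
    kind: str   # leading keyword, e.g. "IF", "MOVE", "ELSE", "END-IF"
    text: str


@dataclass(frozen=True)
class Rule:
    condition: str
    target: str
    value_if_true: str
    value_if_false: str
    source_lines: tuple[int, int]  # traceability back to the legacy code


def parse(source: str) -> list[Statement]:
    """Parser stand-in: tag each non-empty line by its leading keyword."""
    statements = []
    for number, raw in enumerate(source.splitlines(), start=1):
        text = raw.strip().rstrip(".")
        if text:
            statements.append(Statement(number, text.split()[0], text))
    return statements


MOVE = re.compile(r"MOVE (\S+) TO (\S+)")
PATTERN = ["IF", "MOVE", "ELSE", "MOVE", "END-IF"]


def extract_rules(statements: list[Statement]) -> list[Rule]:
    """Rule extractor stand-in: recognizes one simple IF/ELSE assignment shape."""
    rules = []
    for i in range(len(statements) - 4):
        window = statements[i:i + 5]
        if [s.kind for s in window] != PATTERN:
            continue
        then_move = MOVE.fullmatch(window[1].text)
        else_move = MOVE.fullmatch(window[3].text)
        # Only treat it as a rule if both branches assign the same field.
        if then_move and else_move and then_move.group(2) == else_move.group(2):
            rules.append(Rule(
                condition=window[0].text[2:].strip(),
                target=then_move.group(2),
                value_if_true=then_move.group(1),
                value_if_false=else_move.group(1),
                source_lines=(window[0].line, window[4].line),
            ))
    return rules


rules = extract_rules(parse(LEGACY_SOURCE))
print(rules[0])
```

The extracted `Rule` keeps the legacy line range it came from, which is the kind of link the Rewriter and Documenter roles would need in order to trace modern code back to its origin.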

When One Model Isn’t Enough: The Limits of Single-Agent AI

The promise of generative AI has lured many organizations into believing that a single large language model (LLM) can manage their entire modernization journey. But legacy systems—especially in regulated enterprises—are not linear translation problems. They are dense, interwoven systems that reflect decades of operational, regulatory, and technical evolution. Attempting to modernize these systems using a single-agent AI model is like assigning one engineer to redesign an entire power grid—risky, inefficient, and fundamentally flawed.

Single-agent models face three critical limitations in this context:

- Cognitive Overload: Legacy environments involve multiple layers—code syntax, business logic, data schemas, batch workflows, and compliance constraints. Expecting a single model to process and reliably reason through all of these layers leads to output that is shallow at best, and dangerously incorrect at worst.

- Lack of Explainability: AI models used in isolation often function as black boxes. For CIOs and CROs responsible for audit trails, explainable AI isn’t optional—it’s mandatory. A single-agent model provides little transparency into how decisions are made or whether regulatory criteria were considered.

- No Built-in Redundancy or Validation: In a single-agent setup, the same system that generates a transformation is responsible for verifying its own accuracy. This violates the principle of independent verification: whoever produces a change should not be the only one to approve it. Without a second agent (or human) validating outputs, organizations risk embedding subtle but critical errors into their modern systems.

These limitations are not just technical—they’re strategic. They put regulatory compliance, operational continuity, and modernization ROI at risk. Enterprises need an approach that’s modular, verifiable, and scalable. That’s why the future lies in multi-agent systems, where each component can specialize, collaborate, and be independently audited.
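The value of an independent check can be sketched with a small parity test. Below, a validator that knows nothing about how the code was generated compares a candidate implementation against recorded legacy behavior. The fee calculation, the 1.5% rate, and the rounding difference are invented for illustration; they stand in for the kind of subtle numeric discrepancy that a self-checking generator could easily miss.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP
from typing import Callable, Iterable

Fee = Callable[[Decimal], Decimal]
CENT = Decimal("0.01")


def legacy_fee(balance: Decimal) -> Decimal:
    """Stand-in for observed legacy behavior: 1.5% fee, truncated to cents."""
    return (balance * Decimal("0.015")).quantize(CENT, rounding=ROUND_DOWN)


def migrated_fee(balance: Decimal) -> Decimal:
    """Stand-in for rewritten code that rounds half up instead of truncating."""
    return (balance * Decimal("0.015")).quantize(CENT, rounding=ROUND_HALF_UP)


def validate(reference: Fee, candidate: Fee, inputs: Iterable[Decimal]) -> list[dict]:
    """Independent validator: report every input where the two disagree."""
    mismatches = []
    for value in inputs:
        expected, actual = reference(value), candidate(value)
        if expected != actual:
            mismatches.append({"input": value, "expected": expected, "actual": actual})
    return mismatches


cases = [Decimal("100.00"), Decimal("33.70"), Decimal("0.00")]
report = validate(legacy_fee, migrated_fee, cases)
for row in report:
    print(row)
```

Only the 33.70 case disagrees (0.50 versus 0.51), which is exactly the sort of edge case that looks correct on typical inputs and surfaces only when a separate component compares the two implementations.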

Orchestrating Modernization: Inside a Multi-Agent Pipeline

A multi-agent AI pipeline functions much like a digital assembly line, with each agent performing a focused, high-value task in sequence—or, where possible, in parallel. The orchestration layer sits at the center of this architecture, coordinating agent interactions, tracking states, and enforcing execution logic. This is where frameworks like LangGraph, AutoGen, and CrewAI become foundational, enabling complex workflows to be managed with precision and resilience.

Here’s how such a pipeline typically unfolds in a modernization context:

- Ingestion and Parsing: The parser agent kicks off the process, ingesting raw legacy code and producing a structured representation—usually an AST enriched with metadata. This provides the foundational “source of truth” for all subsequent analysis.

- Business Rule Extraction: Next, the rule extractor agent identifies domain-specific logic embedded in conditionals, loops, and hard-coded constants. It may leverage historical usage data or documentation artifacts to enhance context. This stage is critical for ensuring business continuity post-modernization.

- Code Transformation: The rewriter agent receives both structural (AST) and semantic (rules) inputs. It generates modern code aligned to enterprise language standards (Java, JavaScript, C#), architectural styles (REST APIs, microservices), and security requirements.

- Validation and Testing: The validator agent conducts regression testing, code linting, and runtime simulations. It flags anomalies and verifies that the refactored application matches the behavior and outputs of the legacy system under realistic conditions.

- Documentation and Compliance: Finally, the documenter agent compiles everything into technical documentation, architectural diagrams, and audit-ready reports. It ensures traceability from legacy logic to modern implementation, often aligning with standards like HIPAA, Basel IV, or NIST 800-53.

This orchestrated workflow doesn’t just enable scale—it enforces discipline. Each stage produces outputs that are explainable, auditable, and reusable. For large enterprises, this translates into faster modernization cycles, lower operational risk, and systems that are both future-ready and regulation-compliant.
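The five stages above can be sketched as a small orchestrator. This is plain Python rather than the API of LangGraph, AutoGen, or CrewAI, and every stage body is a placeholder: the stage names, the `State` dictionary, and the rule that the pipeline halts when validation fails are assumptions chosen to show the control flow, not a description of any particular product.

```python
from typing import Any, Callable

State = dict[str, Any]
Stage = Callable[[State], State]  # each stage returns the keys it adds to the state


def parser(state: State) -> State:
    return {"ast": {"statements": state["source"].splitlines()}}


def rule_extractor(state: State) -> State:
    lines = (s.strip() for s in state["ast"]["statements"])
    return {"rules": [s for s in lines if s.startswith("IF")]}


def rewriter(state: State) -> State:
    return {"modern_code": [f"# TODO port: {rule}" for rule in state["rules"]]}


def validator(state: State) -> State:
    # Placeholder gate: every extracted rule must have a counterpart in the output.
    return {"valid": len(state["modern_code"]) == len(state["rules"])}


def documenter(state: State) -> State:
    return {"report": f"{len(state['rules'])} rule(s) traced from legacy to modern code"}


PIPELINE: list[tuple[str, Stage]] = [
    ("parse", parser),
    ("extract_rules", rule_extractor),
    ("rewrite", rewriter),
    ("validate", validator),
    ("document", documenter),
]


def run_pipeline(source: str, pipeline: list[tuple[str, Stage]] = PIPELINE) -> State:
    """Run stages in order, tracking progress and stopping if validation fails."""
    state: State = {"source": source, "completed": []}
    for name, stage in pipeline:
        state.update(stage(state))
        state["completed"].append(name)
        if state.get("valid") is False:
            state["halted_at"] = name  # nothing downstream runs on unvalidated code
            break
    return state


result = run_pipeline("IF A > B\n    MOVE 1 TO C")
print(result["completed"])
print(result["report"])
```

Keeping the stage list as data makes the execution order itself inspectable, and the `completed` and `halted_at` entries give the orchestration layer a simple record of how far a run progressed.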

Audit-Ready Automation: Traceability and Compliance at Scale

For enterprises in regulated industries, modernization isn’t just about technical feasibility—it’s about auditability. Every change must be traceable. Every business rule must be preserved. And every line of code must be defensible under scrutiny from compliance teams, regulators, and auditors. This is where multi-agent AI systems offer a transformative advantage.

Unlike monolithic AI models that obscure how decisions are made, multi-agent systems can record step-by-step reasoning at every stage. Each agent logs its actions, rationales, and outcomes, creating a detailed audit trail. This traceability gives enterprises the evidence they need when demonstrating compliance with frameworks like HIPAA (healthcare), Basel IV (banking), or PCI DSS (payments). It also allows for human-in-the-loop intervention when edge cases arise, giving teams greater confidence that no critical logic is lost in translation.
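One way such a per-agent log might be made tamper-evident is to chain each record to the previous one with a hash. The sketch below is an assumption about how an audit trail could be structured, not a description of any specific product; the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """Stable SHA-256 over a record's content."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    records: list[dict] = field(default_factory=list)

    def log(self, agent: str, action: str, rationale: str, outcome: str) -> dict:
        """Append a record that includes the hash of the record before it."""
        body = {
            "seq": len(self.records),
            "agent": agent,
            "action": action,
            "rationale": rationale,
            "outcome": outcome,
            "prev": self.records[-1]["hash"] if self.records else GENESIS,
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered record breaks the chain."""
        previous = GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev"] != previous or record["hash"] != _digest(body):
                return False
            previous = record["hash"]
        return True


trail = AuditTrail()
trail.log("rule_extractor", "extract", "IF/ELSE sets DISCOUNT-RATE", "1 rule found")
trail.log("rewriter", "generate", "port rule to a pure function", "discount_rate() emitted")
trail.log("validator", "compare", "replay recorded inputs", "0 mismatches")
print(trail.verify())
```

A reviewer can replay `verify()` at any time: if someone alters a rationale or outcome after the fact, the stored hashes no longer match and the check fails.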

Moreover, because agents are modular and replaceable, the pipeline can evolve without revalidating every stage: only the agent that changed, and the stages that consume its output, need to be rechecked. If regulations shift or internal policies change, updates can be scoped to specific agents (for example, swapping in a new compliance engine) without breaking the entire pipeline.
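Swapping in a new compliance engine is easiest when the pipeline depends on an interface rather than a concrete agent. The following sketch uses Python's `typing.Protocol` for that interface. The two checker classes and the rules they enforce are invented purely for illustration and do not correspond to any real regulation.

```python
from typing import Protocol


class ComplianceChecker(Protocol):
    """Interface the pipeline depends on; any object with this shape will do."""
    version: str

    def check(self, code: str) -> list[str]: ...


class BaselineChecker:
    version = "policy-v1"

    def check(self, code: str) -> list[str]:
        # Hypothetical rule: flag credentials embedded in source.
        return ["hard-coded credential"] if "password=" in code else []


class StricterChecker:
    version = "policy-v2"

    def check(self, code: str) -> list[str]:
        findings = BaselineChecker().check(code)
        # Hypothetical new rule introduced by a policy change.
        if "print(" in code:
            findings.append("unstructured logging")
        return findings


class CompliancePipeline:
    def __init__(self, checker: ComplianceChecker) -> None:
        self.checker = checker

    def review(self, code: str) -> dict:
        # Record which policy version produced the findings, for traceability.
        return {"policy": self.checker.version, "findings": self.checker.check(code)}


sample = 'print("charging card")'
pipeline = CompliancePipeline(BaselineChecker())
before = pipeline.review(sample)

pipeline.checker = StricterChecker()  # swap one agent; nothing else changes
after = pipeline.review(sample)

print(before)
print(after)
```

Because each result carries the policy version that produced it, a later audit can tell which findings came from which generation of the compliance agent.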

Audit-ready automation isn’t just a feature; it’s a necessity. In an era where regulatory scrutiny and digital transformation are converging, multi-agent AI enables enterprises to move fast—without losing control.

From Mainframe to Microservices: CodeAura’s Agent-Powered Vision

CodeAura’s approach to modernization is rooted in the recognition that legacy systems are not just technical artifacts—they’re institutional memory, regulatory frameworks, and operational blueprints encoded in obsolete languages. To modernize them successfully, enterprises need more than code converters—they need intelligent systems that understand, collaborate, and evolve. That’s where CodeAura’s agent-based architecture shines.

At the core of CodeAura’s platform is a modular pipeline of AI agents—each responsible for a distinct function in the modernization journey. This includes:

- COBOL, PL/I, RPG, and Delphi parsing agents that create deep structural representations of legacy systems.

- Business logic extraction agents trained to recognize regulatory constructs and domain-specific workflows.

- Code transformation agents that output modern, enterprise-grade code in languages like Java and JavaScript.

- Validation agents that simulate business scenarios, run test suites, and benchmark against performance metrics.

- Documentation agents that produce flowcharts, interaction diagrams, and compliance reports aligned with standards like HIPAA and Basel IV.

What sets CodeAura apart is its emphasis on incremental modernization. Rather than attempting risky, big-bang rewrites, CodeAura supports targeted transformations—updating high-risk modules first, integrating modern components with legacy systems, and progressively transitioning toward microservices or cloud-native architectures.
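Incremental modernization of this kind resembles what is commonly called the strangler fig pattern: a routing layer sends each call to the modern implementation if one exists and to the legacy system otherwise. The sketch below illustrates that general pattern only; it is not a description of CodeAura's implementation, and the `ModernizationRouter` class, module names, and risk scores are hypothetical.

```python
from typing import Callable, Optional

Handler = Callable[[dict], dict]


class ModernizationRouter:
    """Routes each module to its modern handler once migrated, else to legacy."""

    def __init__(self, legacy: dict[str, Handler]) -> None:
        self._legacy = dict(legacy)
        self._modern: dict[str, Handler] = {}

    def next_candidate(self, risk: dict[str, float]) -> Optional[str]:
        """Pick the highest-risk module that has not been migrated yet."""
        pending = [m for m in self._legacy if m not in self._modern]
        return max(pending, key=lambda m: risk.get(m, 0.0)) if pending else None

    def migrate(self, module: str, handler: Handler) -> None:
        if module not in self._legacy:
            raise KeyError(f"unknown module: {module}")
        self._modern[module] = handler

    def rollback(self, module: str) -> None:
        """Fall back to the legacy path if the modern handler misbehaves."""
        self._modern.pop(module, None)

    def call(self, module: str, request: dict) -> dict:
        handler = self._modern.get(module, self._legacy[module])
        return handler(request)

    def progress(self) -> float:
        return len(self._modern) / len(self._legacy)


legacy_modules: dict[str, Handler] = {
    "billing": lambda req: {"handled_by": "legacy", "amount": req["amount"]},
    "reporting": lambda req: {"handled_by": "legacy", "rows": req["rows"]},
}
risk_scores = {"billing": 0.9, "reporting": 0.3}  # illustrative values only

router = ModernizationRouter(legacy_modules)
first = router.next_candidate(risk_scores)
router.migrate(first, lambda req: {"handled_by": "modern", "amount": req["amount"]})

print(first, router.progress())
print(router.call("billing", {"amount": 10}))
print(router.call("reporting", {"rows": 3}))
```

Callers never need to know which side handled a request, so modules can move across the boundary one at a time and be rolled back individually if validation later fails.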

This agent-based strategy is deeply aligned with real-world enterprise constraints. It respects existing systems, minimizes disruption, and enables modernization at a sustainable pace. Developers can interact with individual agents via familiar tools like Slack, Jira, or command-line interfaces, while business leaders gain dashboards that surface regulatory and operational insights in real time.

In effect, CodeAura transforms modernization from a massive, one-off project into a manageable, ongoing capability. It’s not just legacy transformation—it’s operational evolution powered by intelligent collaboration.

Modernization That Moves: Turning Static Systems into Living Infrastructure

Legacy systems are often described as “black boxes”—static, fragile, and poorly understood. But in a modern enterprise, infrastructure must be dynamic: capable of adapting to change, integrating with cloud services, and evolving alongside shifting business and regulatory landscapes. Multi-agent AI systems offer the blueprint for this shift—from rigid, monolithic systems to living, adaptive architectures.

With a multi-agent framework in place, modernization becomes a continuous process rather than a one-time overhaul. New business rules can be encoded and propagated without refactoring entire systems. Updated compliance requirements can trigger agent-driven audits and remediations. Performance issues can be isolated to specific modules and addressed without rewriting stable components.

This agility fundamentally changes the modernization ROI equation. Instead of investing tens of millions in multi-year migrations, enterprises can incrementally modernize the most critical, and riskiest, parts of their stack first. Legacy and modern systems coexist in a hybrid state, with AI agents facilitating communication, documentation, and quality control across the boundary.

For organizations staring down decades of technical debt, this is a strategic turning point. CodeAura’s agent-powered model doesn’t just help modernize code—it helps modernize capability. Enterprises gain systems that are not only up to date, but designed to stay that way.

If your legacy codebase is a tangled mess, maybe it’s time to call in a team—not of consultants, but of intelligent agents built to work together, move fast, and keep your infrastructure alive.

Let’s Talk About Your COBOL Documentation and Modernization Needs — Schedule a session with CodeAura today.